Evaluating Technology for Human Performance

Posted by Jordan Haggit, PhD on February 23, 2023

Contributors: Glenn Hodges, PhD & Kaleb Embaugh

Table of Contents

- A New Capability for Evaluation

- Evaluating People, Technology, & Work

- JADE Software Capabilities

- Learn More About JADE

A New Capability for Evaluation

Accelerated development of technology that supports work in high-stakes domains promises to enable better, faster, and cheaper performance. However, such promises often rest on the incorrect assumption that implementing more technology will automatically lead to better work outcomes. Instead, new technology often changes the nature of the work itself, along with the procedures, strategies, and challenges experienced by users (e.g., the Envisioned World Problem; Woods & Dekker, 2000).

Traditional evaluation approaches don’t consider how performance is enhanced or inhibited as new technology changes the conditions of work. This obscures the potential impact of new technology and may result in an implementation that is ineffective or negatively impacts performance. Before deciding whether or not to acquire, develop, or implement a new technology – or if one technology is more effective than another – the potential impact on critical decisions, workflows, and outcomes must be understood.

To keep pace with technological development, evaluations should be systematic, rigorous, and rapid. Evaluations should focus on how the new technology impacts key contributors to performance and should include:

- Authentic situations the technology will be used in

- Scenarios that include both prototypical and rare situations

- Consideration of individual, team, and organizational level impacts

- Performance metrics that align with identified cognitive challenges

Scenarios that simultaneously capture the complexity of the work domain and provide control over the conditions of observation and performance assessment are a challenge to generate, but they ensure the situations of interest are authentic and effective. For example, one current approach is to passively observe how real work occurs using new technology. This has the benefit of allowing researchers to see unencumbered work play out over time without the influence of the researcher or designer. However, it provides no control over the situations of interest and does not allow a researcher to assess the impact on performance. Another approach is to use laboratory tasks, which provide a high level of control and allow a researcher to systematically assess performance with new technology in different situations of interest. However, laboratory tasks typically lack authenticity, which limits meaningful generalization to real-world tasks.

To overcome these limitations and rapidly evaluate the impact of new technology, we created the Joint Activity Design and Evaluation (JADE) software. JADE is based on a “Staged-World” approach, which maintains the benefits of real-world complexity while also providing control over the conditions of observation.

Initially developed to study the cognitive and physiological performance of intelligence analysts, JADE is relevant to many other high-stakes domains where stakeholders need to understand how technology impacts their research, performance, or training objectives.

JADE enables users to:

- Study complex cognitive work

- Evaluate and develop training simulations

- Evaluate new or existing technologies (e.g., AI/ML algorithms)

Evaluating People, Technology, & Work

Many researchers and designers have benefited from using a staged-world approach to help understand the complexities of high-stakes work. However, developing staged-world studies has traditionally required a high degree of domain expertise and resources to be effective. First, to design scenarios, subject matter experts (SMEs) must be heavily involved to provide their knowledge of relevant details, interactions, dependencies, and problem types. Scenarios will be ineffective if they don’t feel authentic, if they align too closely with well-known historic events, or if they too tightly constrain the available actions.

Second, staged worlds are typically run as “table-top” simulations. These require a significant amount of manual work because a facilitator must administer scenarios and embed challenge conditions, while others involved must capture performance events, store and analyze data, or configure multiple software applications. This makes it difficult to maintain the same experience across participants, which can reduce data quality and undermine systematic evaluations.

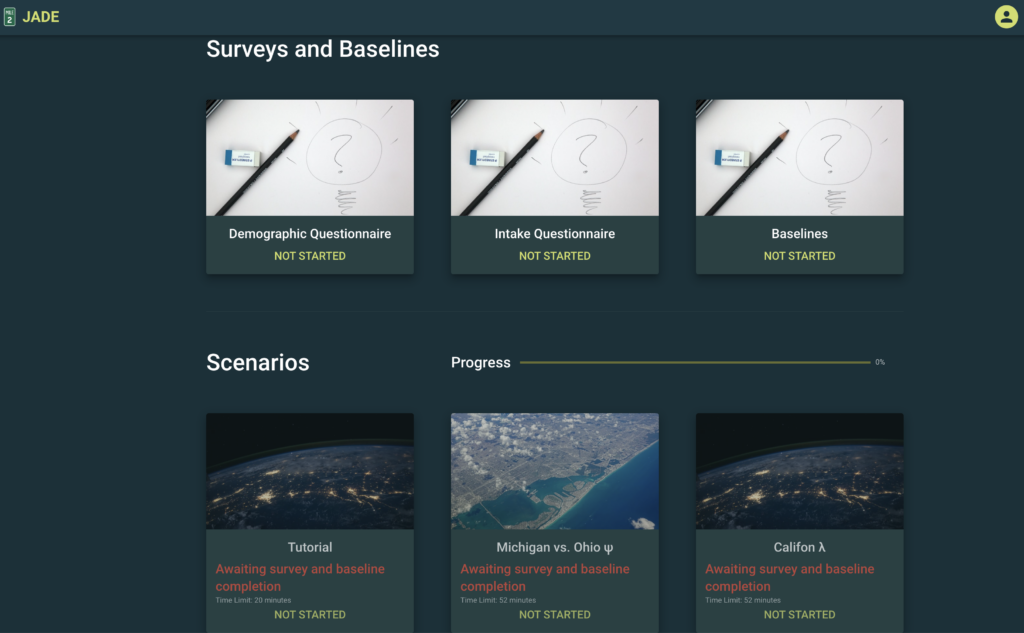

JADE Software Capabilities

With these challenges in mind, we developed JADE as a way to help decision-makers understand and evaluate the performance implications of cognitive work, new or existing technological interventions, and training approaches.

JADE is lightweight, portable, and designed so that any user will have the same experience regardless of time and location. This allows users to gain key insights that support stakeholder decision-making regarding investments in training approaches, acquisitions, or research and development.

JADE includes the following capabilities and benefits:

- Administering scenarios – Participants are presented with a series of scenarios that are intended to elicit targeted responses. For intelligence analysts, this includes a library of scenarios that vary in difficulty and type of challenge (e.g., time pressure, misleading information, ambiguous information). Researchers can also decide when to change the dynamic of the scenario by injecting new information, which enables researchers to better understand decision-making.

- Capturing and storing performance data – JADE is configured to capture and store all related participant data during a scenario. This includes any demographic and behavioral questionnaires administered before or after scenarios, as well as physiological data such as heart rate, heart rate variability, eye-tracking, and voice data. These data can help researchers understand the relationships among behavioral, physiological, and performance measures.

- Data analysis – All data collected can be output in different formats or analyzed within the software. This allows researchers to begin analyzing data to understand performance immediately following a scenario. This also strengthens the ability to debrief with participants to understand decision-making by targeting questions based on their actual decisions and performance in a given scenario.

- Integrating with high-side software applications – JADE can be integrated into classified networks, because some evaluations need to connect to classified networks and software applications. This provides an additional level of real-world validity and overcomes a challenge faced by many commercial off-the-shelf (COTS) software products.
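To make the first three capabilities more concrete, here is a minimal sketch of how a scenario with planned information injects, a timestamped event log, and a simple post-scenario analysis might fit together. This is not JADE's actual data model or API, which this post does not describe; every name below (`Scenario`, `Inject`, `SessionLog`, `run_session`, `response_latencies`) is hypothetical and chosen only for illustration.

```python
from __future__ import annotations

import csv
import io
from dataclasses import dataclass, field

# All names here are hypothetical; this is an illustration, not JADE's API.
FIELDS = ["participant", "scenario", "t_seconds", "kind", "detail"]


@dataclass
class Inject:
    """New information introduced into a scenario at a planned time."""
    at_seconds: float
    message: str


@dataclass
class Scenario:
    name: str
    challenge: str  # e.g., "time pressure" or "misleading information"
    injects: list[Inject] = field(default_factory=list)


@dataclass
class SessionLog:
    """Timestamped events for one participant in one scenario."""
    participant_id: str
    scenario: Scenario
    events: list[dict] = field(default_factory=list)

    def record(self, t_seconds: float, kind: str, detail: str) -> None:
        self.events.append({
            "participant": self.participant_id,
            "scenario": self.scenario.name,
            "t_seconds": t_seconds,
            "kind": kind,
            "detail": detail,
        })

    def to_csv(self) -> str:
        """Export the log so it can be analyzed in other tools."""
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(self.events)
        return buf.getvalue()


def run_session(participant_id: str, scenario: Scenario,
                actions: list[tuple[float, str]]) -> SessionLog:
    """Merge scripted injects and observed actions into one ordered log."""
    log = SessionLog(participant_id, scenario)
    timeline = [(i.at_seconds, "inject", i.message) for i in scenario.injects]
    timeline += [(t, "action", detail) for t, detail in actions]
    for t, kind, detail in sorted(timeline, key=lambda e: e[0]):
        log.record(t, kind, detail)
    return log


def response_latencies(log: SessionLog) -> list[float]:
    """Seconds from each inject to the participant's next recorded action."""
    latencies = []
    for idx, event in enumerate(log.events):
        if event["kind"] != "inject":
            continue
        later = (e for e in log.events[idx + 1:] if e["kind"] == "action")
        nxt = next(later, None)
        if nxt is not None:
            latencies.append(nxt["t_seconds"] - event["t_seconds"])
    return latencies


scenario = Scenario(
    name="example-scenario",
    challenge="misleading information",
    injects=[Inject(120.0, "Conflicting report arrives")],
)
log = run_session("P01", scenario,
                  [(45.0, "opened report A"), (150.0, "revised assessment")])
print(response_latencies(log))  # [30.0]
```

Keeping injects and participant actions in one time-ordered log is what makes measures such as response latency easy to compute right after a scenario, and gives a debrief something specific to ask about. Physiological streams such as heart rate would need their own sampling and synchronization, which this sketch leaves out.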

JADE allows us to study a broad set of situations across many different domains. JADE can drastically reduce the cost of conducting staged-world studies by letting researchers plug in the content, scenarios, and analyses that best address their research and training objectives. The flexibility and ease of use of JADE enable rapid technology evaluation, and they support knowledge worker growth through deliberate practice of decision-making to improve performance.

Learn More About JADE

Interested in learning more about our JADE tool? You can view a demo HERE or email the project lead, Kaleb Embaugh, at kembaugh@miletwo.us.